To scrape data effectively, you need the right tools. Here are some commonly used libraries and frameworks:

BeautifulSoup: Ideal for parsing HTML and extracting data.

Scrapy: A powerful and flexible framework for large-scale web scraping.

Selenium: Automates a real browser, which makes it useful for scraping dynamic websites that render content with JavaScript.

Requests: A simple library for making HTTP requests.

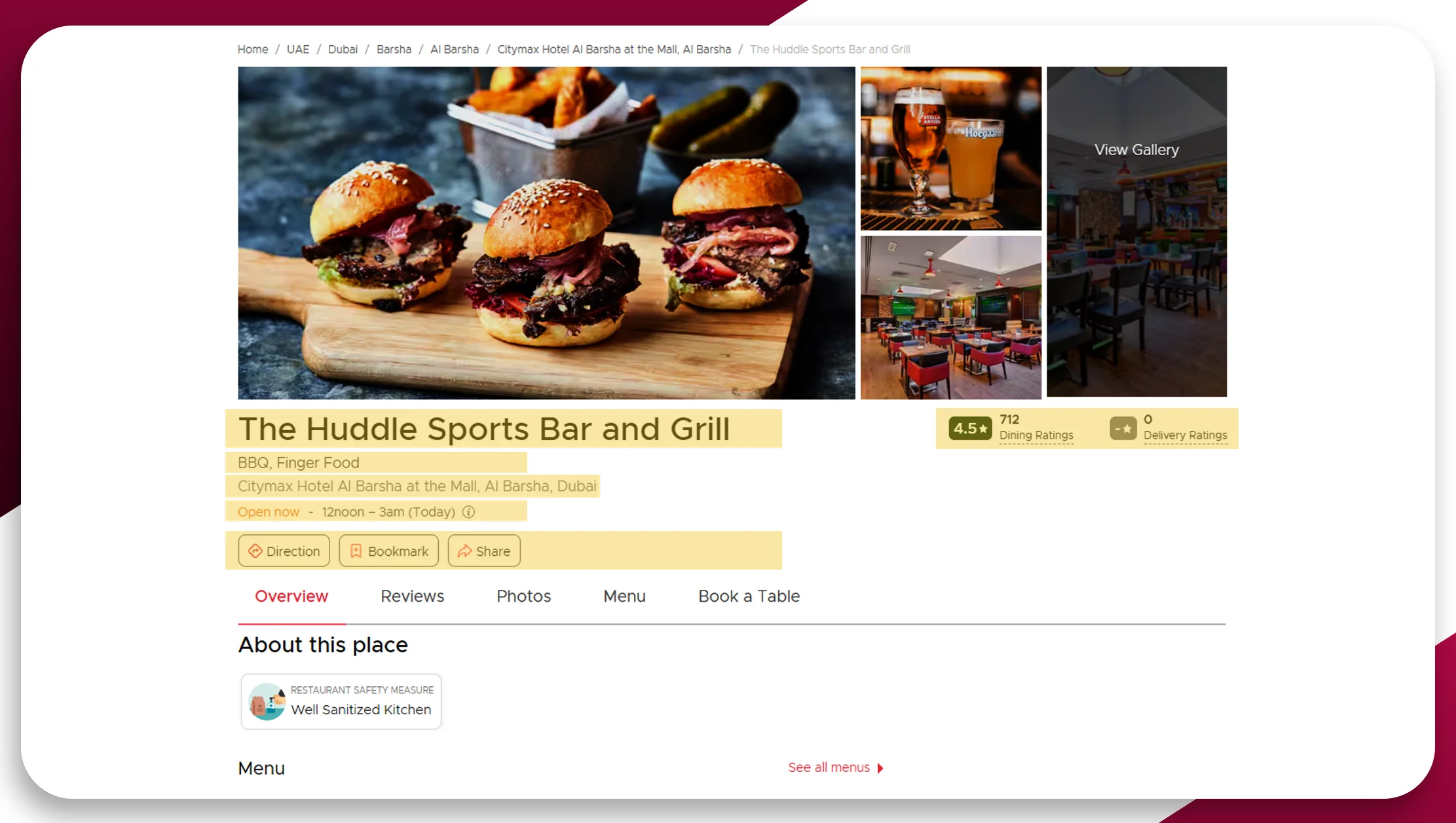

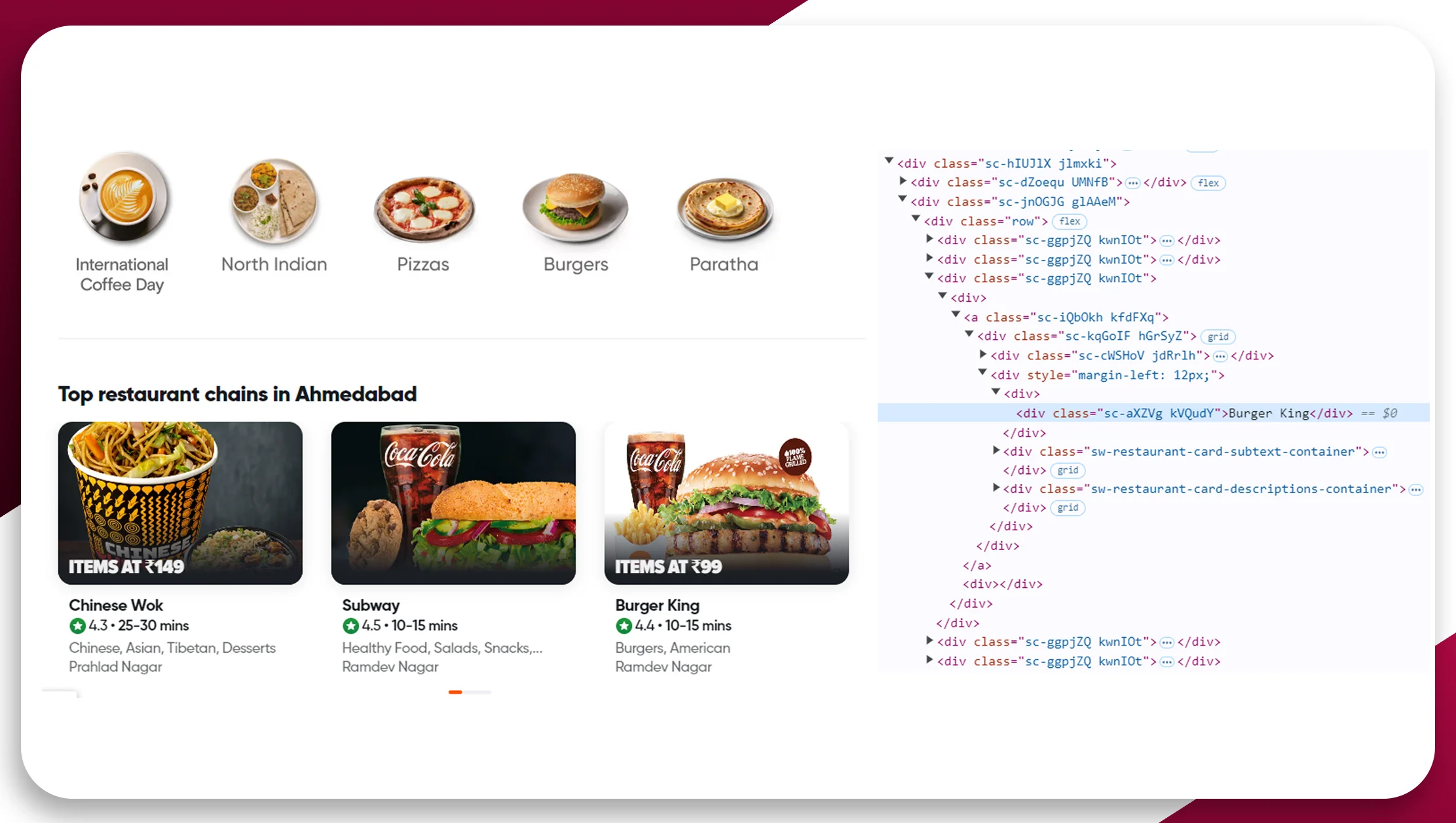

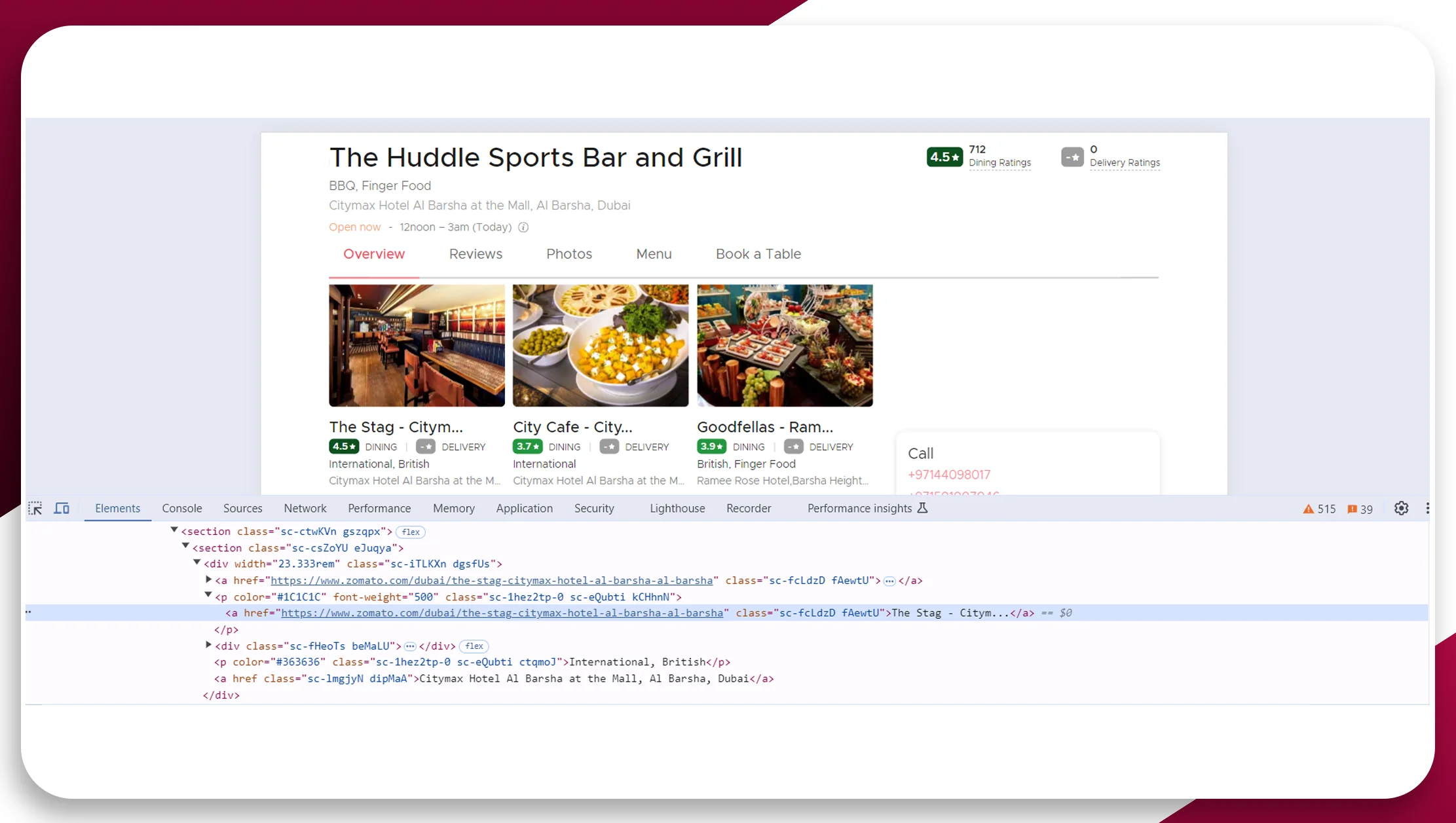

Steps to Scrape Food Delivery Platforms

1. Understand the Website Structure

Before you start scraping, inspect the website's HTML structure. Use browser developer tools to identify the elements containing the needed data, such as restaurant names, menu items, prices, and customer reviews.
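As a rough sketch of what that inspection gives you, the snippet below parses a small, made-up HTML fragment and lists the tag and class pairs it contains. The markup, the restaurant data, and every class name (`restaurant-card`, `restaurant-name`, `menu-item`, `price`, `rating`) are hypothetical stand-ins; real platforms use their own names, and those change often.

```python
from bs4 import BeautifulSoup

# Made-up markup standing in for what you might see in browser developer tools.
SAMPLE_HTML = """
<div class="restaurant-card">
  <h2 class="restaurant-name">Spice Garden</h2>
  <span class="rating">4.3</span>
  <ul class="menu">
    <li class="menu-item">Paneer Tikka <span class="price">240</span></li>
    <li class="menu-item">Dal Makhani <span class="price">180</span></li>
  </ul>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Collect every (tag, class) pair so you can decide which selectors to target.
seen = sorted(
    {(tag.name, cls) for tag in soup.find_all(True) for cls in tag.get("class", [])}
)
for tag_name, cls in seen:
    print(f"{tag_name}.{cls}")

# Check that a candidate selector returns the element you expect.
restaurant_name = soup.select_one(
    "div.restaurant-card h2.restaurant-name"
).get_text(strip=True)
print(restaurant_name)
```

Running the same listing against a saved copy of a real page is a quick way to confirm the selectors you spotted in developer tools before writing the full scraper.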

2. Set Up Your Environment

Install the necessary libraries. You can do this using pip:

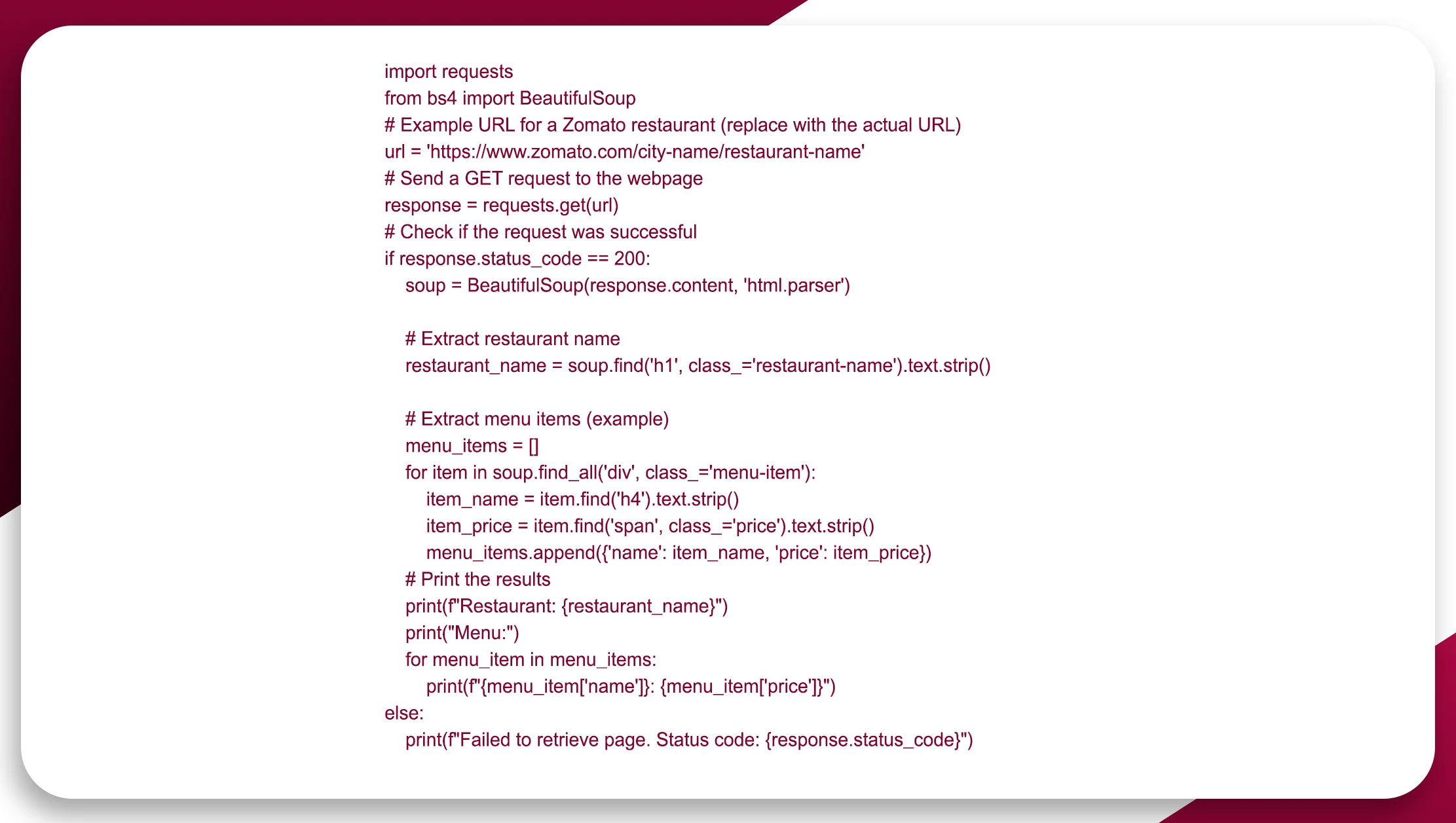

pip install requests beautifulsoup4 scrapy selenium

3. Write the Scraping Code

Here's an example of how to scrape data from Zomato using BeautifulSoup and requests:
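A minimal sketch of that approach is below. The URL, the `User-Agent` string, and every CSS class are placeholders, not Zomato's real markup; substitute the selectors you identified in step 1. The function names `parse_restaurants` and `scrape_listing` are also just illustrative.

```python
import requests
from bs4 import BeautifulSoup

# Placeholder URL and headers: assumptions for illustration only.
LISTING_URL = "https://www.example.com/city/restaurants"
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; example-scraper/0.1)"}


def parse_restaurants(html):
    """Extract the name and rating of each restaurant card in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    # "div.restaurant-card" and the classes below are hypothetical selectors.
    for card in soup.select("div.restaurant-card"):
        name_tag = card.select_one(".restaurant-name")
        rating_tag = card.select_one(".rating")
        results.append({
            "name": name_tag.get_text(strip=True) if name_tag else None,
            "rating": rating_tag.get_text(strip=True) if rating_tag else None,
        })
    return results


def scrape_listing(url=LISTING_URL):
    """Download one listing page and parse it."""
    response = requests.get(url, headers=HEADERS, timeout=10)
    response.raise_for_status()  # raise an exception on 4xx/5xx responses
    return parse_restaurants(response.text)
```

Keeping the parsing step separate from the download makes it possible to test the selectors against saved HTML without sending any requests. Calling `scrape_listing()` with a real listing URL would then return a list of dictionaries, one per restaurant.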

4. Handle Pagination

Most food delivery platforms display data across multiple pages. Make sure your code can navigate through pagination to scrape all relevant data.
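One way to sketch this is a loop that requests page 1, 2, 3, and so on until a page comes back empty. The helper below assumes numbered pages; `fetch_page` and `parse_page` are hypothetical callables you supply, and the page cap and delay are arbitrary defaults.

```python
import time


def scrape_all_pages(fetch_page, parse_page, max_pages=50, delay_seconds=1.0):
    """Collect items from page 1 onward until a page yields no items.

    fetch_page(page_number) -> HTML string for that page (hypothetical helper)
    parse_page(html)        -> list of items found in that HTML (hypothetical helper)
    """
    all_items = []
    for page_number in range(1, max_pages + 1):
        items = parse_page(fetch_page(page_number))
        if not items:
            break  # an empty page is treated as the end of the listing
        all_items.extend(items)
        time.sleep(delay_seconds)  # pause between requests to go easy on the server
    return all_items
```

In practice `fetch_page` might wrap something like `requests.get(url, params={"page": n}, timeout=10).text`, though the `page` parameter name is an assumption. Sites that use "next" links or infinite scroll need a different stopping rule, and infinite scroll usually calls for Selenium.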

5. Explore API Options

Some food delivery platforms offer APIs for easier data access. When one is available, prefer it over scraping HTML: it returns structured data and is less likely to break when the page layout changes.
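For illustration only, here is roughly what an API call could look like. The endpoint path (`/restaurants`), the `city` parameter, the bearer-token header, and the response shape are all assumptions, not any real platform's API; check the provider's documentation for the actual contract.

```python
def fetch_restaurants(session, base_url, city, api_key=None):
    """Request restaurant records from a JSON API and return a list of dicts.

    `session` is any object with a requests-style get(), such as requests.Session().
    The endpoint, query parameter, and response shape are hypothetical.
    """
    headers = {"Accept": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"  # assumed auth scheme
    response = session.get(
        f"{base_url}/restaurants",
        params={"city": city},
        headers=headers,
        timeout=10,
    )
    response.raise_for_status()  # raise an exception on 4xx/5xx responses
    payload = response.json()
    # Assumed response shape: {"restaurants": [{"name": ..., "rating": ...}, ...]}
    return [
        {"name": item.get("name"), "rating": item.get("rating")}
        for item in payload.get("restaurants", [])
    ]
```

With the Requests library installed, a call might look like `fetch_restaurants(requests.Session(), "https://api.example.com/v1", "Pune")`, where the base URL is again a placeholder. Passing the session in as an argument also makes the function easy to test with a stub.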

6. Adhere to Legal Guidelines

Always review each platform's terms of service before scraping. Ensure compliance with their rules to avoid any legal issues.


How to Scrape Data from Multiple Food Delivery Platforms: A Complete Guide

Scrape data from multiple food delivery platforms, including menus, prices, ratings, and availability, for seamless analysis, comparison, and market insights.
